Neural style transfer

AI Art Generation Explained

If you’ve ever wondered how AI image generation works, this post is for you. Many people use AI image generators for work, hobbies, and fun, but how they actually work can feel like a totally foreign concept. One of our team members, Raed-Alshehri, posted a GitHub project about Neural Style Transfer. We’ll walk through the project and put everything into layman’s terms so you can see behind the scenes of AI art generation. We’ve also created a terminology page to help you understand machine learning basics. Click the links throughout this post to learn more about each term.

Raed’s Project Premise

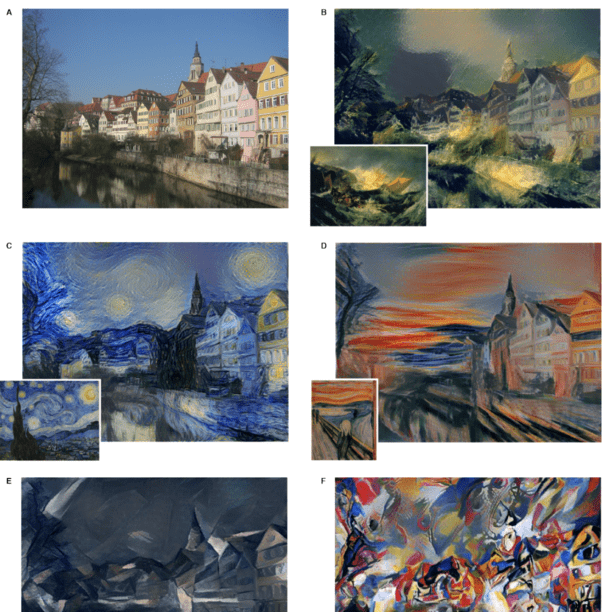

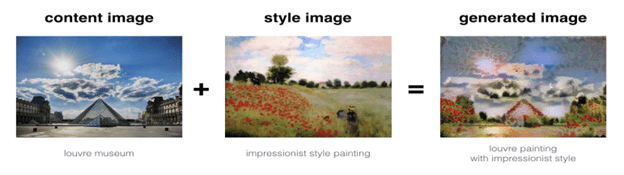

Raed’s project premise is to create a unique digital art piece by blending the iconic Louvre Museum in Paris with the impressionist style of Claude Monet. Specifically, he aims to use the content image C of the Louvre Museum and the style image S of Monet’s impressionist style to generate a final image that combines both elements. The end result will be a visually stunning image that represents the marriage of historical significance and artistic style.

How does Neural Style Transfer Work?

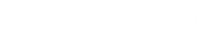

Neural Style Transfer is an algorithm developed by Gatys et al. (2015) for creating visually compelling digital art. The algorithm works with two images: a content image and a style image. The content image provides the subject matter for the final image, while the style image determines its stylistic features. Unlike most optimization algorithms, which optimize a cost function to obtain a set of parameter values, Neural Style Transfer optimizes a cost function to obtain pixel values. By iteratively adjusting the pixel values of the generated image, the algorithm gradually transforms it until it keeps the subject matter of the content image while matching the style of the style image. This process minimizes a loss function that measures how far the generated image’s feature representations are from the content of the content image and from the style of the style image.

Transfer Learning

Raed’s project begins with a model pre-trained for image recognition as its base. This approach is known as transfer learning: a model trained on one task is repurposed for a different but related task.

Applying Neural Style Transfer

Neural style transfer takes place in three steps:

- Training the model to use the content image for the content of the generated image.

- Training the model to use the style image for the style of the generated image.

- Putting together the two algorithms so they work together to create the ideal generated image.

Training the Model on Content

To create a generated image that looks like the content image, we need to choose a layer in the neural network that captures the important details of the original image. A neural network is like a group of tiny decision-makers working together to solve a problem. Each decision-maker is called a neuron, and the neurons are organized into layers. Early layers detect low-level features such as edges and simple textures, while later layers detect high-level features such as whole objects. If we choose a layer that’s too close to the beginning, the generated image might not capture enough high-level details. If we choose a layer that’s too close to the end, it might miss out on some low-level details. Choosing a “middle” activation layer means picking a layer that has learned to detect both, which tends to result in images that look more visually pleasing and similar to the original image.

Content Cost

In simple terms, content cost measures the difference between the content image and the generated image, compared at the chosen layer of the network. During optimization, the pixels of the generated image are adjusted to lower the content cost. You want the content cost as low as possible at this point. (Later, when adding in style, you’ll want to balance it so the result is a good mix of content and style.)
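To make the idea concrete, here is a minimal sketch of a content cost in NumPy. It is not taken from Raed’s project: the function name `content_cost`, the use of a plain mean squared difference, and the random stand-in activations are all assumptions chosen for illustration.

```python
import numpy as np

def content_cost(a_content, a_generated):
    """Mean squared difference between two activation volumes.

    Both inputs are assumed to have shape (height, width, channels) and to
    come from the same layer of the network.
    """
    a_content = np.asarray(a_content, dtype=float)
    a_generated = np.asarray(a_generated, dtype=float)
    if a_content.shape != a_generated.shape:
        raise ValueError("activations must have the same shape")
    return float(np.mean((a_content - a_generated) ** 2))

# Random stand-ins for layer activations (hypothetical, not real network output).
rng = np.random.default_rng(0)
a_c = rng.normal(size=(4, 4, 3))
a_g_far = rng.normal(size=(4, 4, 3))                 # unrelated image
a_g_near = a_c + 0.01 * rng.normal(size=(4, 4, 3))   # almost the same image

print(content_cost(a_c, a_c))        # identical activations give 0.0
print(content_cost(a_c, a_g_near))   # small cost
print(content_cost(a_c, a_g_far))    # larger cost
```

The closer the generated image’s activations are to those of the content image, the lower the number that comes out.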

Training the Model on Style

To train the model on style, there are a few separate steps. Filters are what’s used to detect features in an image, like sharp lines or soft objects. First, different filters are applied to the image and their outputs are collected. Second, a matrix is built that measures how common each filter’s feature is. For example, if a filter is detecting vertical textures in the image, then the matrix shows how common vertical textures are in the image as a whole. Third, the same matrix measures how often each feature occurs together with every other feature. Do two filters tend to respond in the same places, or in different ones? Put together, the matrix (known as a Gram matrix) captures the prevalence of each feature as well as how often it occurs with any other feature. This is how you detect style!
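The matrix described above can be sketched in a few lines of NumPy. This is an illustrative example under assumptions, not code from Raed’s project: the function name `gram_matrix` and the tiny hand-made “filter outputs” are made up so the numbers are easy to check by eye.

```python
import numpy as np

def gram_matrix(activations):
    """Return the (channels x channels) Gram matrix of a (height, width, channels) volume."""
    a = np.asarray(activations, dtype=float)
    h, w, c = a.shape
    flat = a.reshape(h * w, c)   # one row per pixel position, one column per filter
    return flat.T @ flat         # entry [i, j] sums filter_i * filter_j over all positions

# Toy 2x2 "image" with two filters:
# filter 0 responds everywhere, filter 1 responds only in the top row.
acts = np.zeros((2, 2, 2))
acts[:, :, 0] = 1.0
acts[0, :, 1] = 1.0

G = gram_matrix(acts)
print(G)
# [[4. 2.]
#  [2. 2.]]
```

The diagonal entries (4 and 2) show how common each filter’s feature is on its own, and the off-diagonal entry (2) shows how often the two features appear in the same place.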

Style Cost

Just like with content cost, the style cost is computed from the difference between the style image and the generated image, this time by comparing their style matrices. For style cost, however, several layers can be used, and each layer can be weighted to produce more desirable results.
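Here is a rough sketch of a weighted style cost across layers. The function names, the normalization constant, the layer shapes, and the example weights are assumptions for illustration; the project itself may define them differently.

```python
import numpy as np

def gram_matrix(activations):
    """Gram matrix of a (height, width, channels) activation volume."""
    a = np.asarray(activations, dtype=float)
    h, w, c = a.shape
    flat = a.reshape(h * w, c)
    return flat.T @ flat

def layer_style_cost(a_style, a_generated):
    """Squared difference between the Gram matrices of one layer.

    The 1 / (4 * (h*w*c)^2) scaling is a common convention, assumed here.
    """
    a_style = np.asarray(a_style, dtype=float)
    a_generated = np.asarray(a_generated, dtype=float)
    h, w, c = a_style.shape
    diff = gram_matrix(a_style) - gram_matrix(a_generated)
    return float(np.sum(diff ** 2) / (4.0 * (h * w * c) ** 2))

def style_cost(style_acts, generated_acts, layer_weights):
    """Weighted sum of per-layer style costs."""
    if not (len(style_acts) == len(generated_acts) == len(layer_weights)):
        raise ValueError("need one weight and one pair of activations per layer")
    return sum(
        weight * layer_style_cost(s, g)
        for s, g, weight in zip(style_acts, generated_acts, layer_weights)
    )

# Random stand-ins for activations at two layers (hypothetical shapes).
rng = np.random.default_rng(0)
style_acts = [rng.normal(size=(8, 8, 4)), rng.normal(size=(4, 4, 8))]
generated_acts = [rng.normal(size=(8, 8, 4)), rng.normal(size=(4, 4, 8))]

even = style_cost(style_acts, generated_acts, [0.5, 0.5])        # both layers count equally
first_only = style_cost(style_acts, generated_acts, [1.0, 0.0])  # only the first layer counts
print(even, first_only)
```

Changing the list of weights shifts which layers, and therefore which kinds of features, dominate the style of the result.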

Training the Model on Both Content & Style

The content cost and style cost are combined into a formula so both are taken into consideration at the same time. Hyperparameters are applied to this formula, meaning that the content and style can be weighted. The engineer can adjust the hyperparameters to produce output that has more or less emphasis on either the content or the style, depending on the desired effect.
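As a sketch, the combined formula can be as simple as a weighted sum. The names `alpha` and `beta` and their default values are assumptions picked for illustration, not values confirmed by the project.

```python
def total_cost(j_content, j_style, alpha=10.0, beta=40.0):
    """Weighted sum of content and style costs.

    alpha weights the content cost and beta weights the style cost;
    both are hyperparameters (the defaults here are illustrative only).
    """
    return alpha * j_content + beta * j_style

# The same two costs, weighted two different ways.
print(total_cost(0.5, 0.25))                        # 15.0
print(total_cost(0.5, 0.25, alpha=1.0, beta=100.0)) # 25.5, style now dominates
```

Raising `beta` relative to `alpha` makes style differences more expensive, so the optimizer works harder on matching style than on preserving content.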

Implementation of Neural Style Transfer

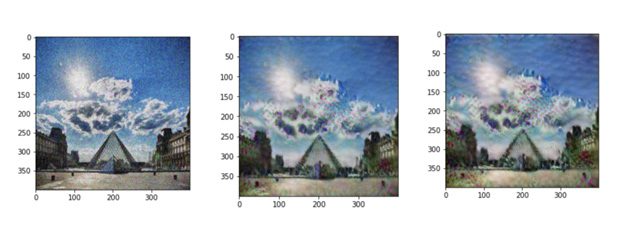

Now, we’ll put it all together and show you what the images look like along the way.

- First, the input images for content and style are loaded.

- A random starting image is generated. Then a model is loaded, and the content cost is calculated. By adjusting hyperparameters, a different output can be created.

During optimization, the values being updated are the pixels of the generated image rather than the weights of the network. They start out random and are then updated over many epochs to reduce the cost function. Each epoch uses the pixel values produced by the previous epoch as its starting point, so the image improves a little at a time.
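The loop below is a toy sketch of that epoch-by-epoch process. To stay self-contained it makes a big simplifying assumption: it uses raw pixel values as the “features” instead of activations from a pre-trained network, so it only illustrates the mechanics of optimizing pixels, not real style transfer. The weights `alpha` and `beta`, the step size, and the epoch count are also assumptions.

```python
import numpy as np

def gram(x):
    """Gram matrix of a (positions, channels) array."""
    return x.T @ x

def cost_and_grad(x, content, style_gram, alpha, beta):
    """Total cost and its gradient with respect to the pixel values x."""
    n = x.size
    diff = x - content
    gram_diff = gram(x) - style_gram
    j_content = np.sum(diff ** 2) / n
    j_style = np.sum(gram_diff ** 2) / (4.0 * n ** 2)
    cost = alpha * j_content + beta * j_style
    # Analytic gradients of the two terms above.
    grad = alpha * 2.0 * diff / n + beta * (x @ gram_diff) / n ** 2
    return float(cost), grad

rng = np.random.default_rng(0)
content = rng.uniform(size=(16, 3))    # stand-in content "image": 16 pixels, 3 channels
style = rng.uniform(size=(16, 3))      # stand-in style "image"
generated = rng.uniform(size=(16, 3))  # generated image starts as random noise

style_gram = gram(style)
step_size = 1.0
history = []
for epoch in range(500):
    cost, grad = cost_and_grad(generated, content, style_gram, alpha=1.0, beta=1.0)
    history.append(cost)
    # Each epoch starts from the pixels left by the previous epoch.
    generated = generated - step_size * grad

print(f"cost at start: {history[0]:.4f}, after {len(history)} epochs: {history[-1]:.4f}")
```

In a real implementation the costs would be computed from network activations and the gradients would come from an automatic differentiation library, but the structure of the loop is the same.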

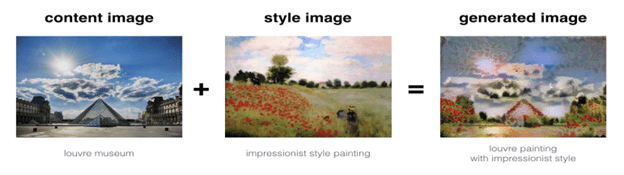

The Final Product

Here’s what you could expect from the example above. The machine ran 20,000 epochs to create the final generated image.

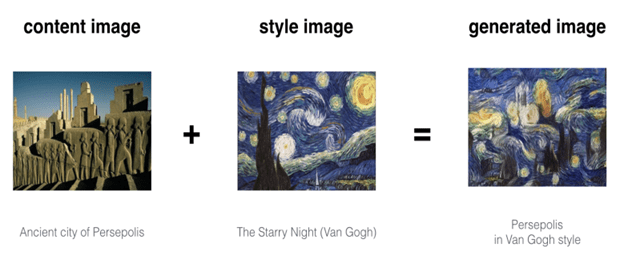

Here are some more examples using the same model:

In Conclusion

We want to thank Raed for his interest in machine learning and for providing an example of neural style transfer for us to work with. If you have a machine learning project you’re looking to build, our talented team at Quant would be happy to chat. Our most popular AI implementation is in real estate (Suhail.ai), and we’re always looking for opportunities to expand our knowledge to novel ideas in different industries.
